An important metric to call out is Watermark Delay. The value of this metric indicates the processing delay that event messages are actually experiencing. Under normal operation the Watermark Delay metric should be zero. A watermark delay can have numerous causes, such as clock skew on one of the nodes, insufficient compute resources, or the out‐of‐order range. Clock skew is resolved by the platform, so there is no action for you to take. An Azure Stream Analytics job that has a watermark delay might need more compute power, which is managed by the number of allocated streaming units. The out‐of‐order range you saw in Figure 7.43 can cause a watermark delay because of the adjustment it makes to the watermark.

As you saw in Figure 7.41, the event message with sequence six has a watermark that is significantly different from those of the other event messages. Sequence six is considered a late‐arriving message, but messages can also arrive early, for many of the same reasons they arrive late. When a message arrives early, which means its event time is greater than its arrival time, the watermark will be older than the event time too. This is a problem if the Azure Stream Analytics job is restarted. Reasons for restarting were discussed earlier; one of them is that you manually stopped the job and now want to start it again from the time it was last stopped (refer to Figure 7.18). To make sure no event messages are missed, messages are taken from the time of stoppage plus the configured time window. Because the early‐arriving event messages will have a watermark greater than the time window, they are dropped and not processed. They are, however, counted in the Early Input Events metric, which you can find by selecting the See All Metrics link next to the Key Metrics header in Figure 7.44.
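To make the effect of the out‐of‐order range on Watermark Delay more concrete, the following Python sketch models the relationship in a simplified way. It assumes that the watermark is the largest event time seen so far minus the out‐of‐order range, and that the delay is the wall clock time minus that watermark. The function names and sample timestamps are hypothetical and chosen only for illustration; this is not the platform's actual implementation.

```python
from datetime import datetime, timedelta, timezone


def watermark(max_event_time: datetime, out_of_order_range: timedelta) -> datetime:
    """Simplified model (assumption): largest event time seen minus the out-of-order range."""
    return max_event_time - out_of_order_range


def watermark_delay(wall_clock: datetime, current_watermark: datetime) -> timedelta:
    """Simplified model (assumption): wall clock time minus the watermark, never negative."""
    return max(wall_clock - current_watermark, timedelta(0))


def is_early(event_time: datetime, arrival_time: datetime) -> bool:
    """An event message is early when its event time is greater than its arrival time."""
    return event_time > arrival_time


# Hypothetical sample values: the newest event carries the current wall clock time.
now = datetime(2024, 1, 1, 12, 0, 20, tzinfo=timezone.utc)
latest_event_time = now

# With no out-of-order range, the modeled delay is zero.
delay_without_range = watermark_delay(now, watermark(latest_event_time, timedelta(0)))

# A 5-second out-of-order range holds the watermark back, so a delay appears.
delay_with_range = watermark_delay(
    now, watermark(latest_event_time, timedelta(seconds=5))
)

# An event stamped 10 seconds ahead of its arrival time counts as early.
early = is_early(now + timedelta(seconds=10), now)
```

In this model, the only difference between the two delay values is the out‐of‐order range, which mirrors why a nonzero range can show up as a watermark delay even when the job has enough compute resources.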

Replay Archived Stream Data

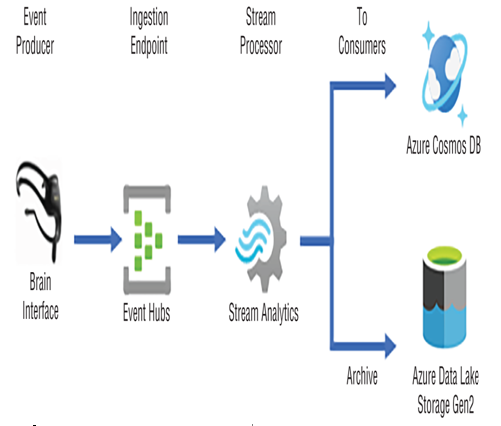

When a disruption to your data stream processing occurs in Azure Stream Analytics, as you learned in the last section, the platform uses checkpoints and watermarks to recover. The recovery consists of processing any lost events and catching up on any queued event messages. There is currently no built‐in archive of your data stream events, so if you want one, you need to create it yourself. You might be surprised to read that an exercise you already completed created, in many ways, an archived set of data that can be used to replay the stream if required. In Exercise 7.9, you wrote the output of your Azure Stream Analytics query to two different data storage products. The same approach is useful for writing an archive, as long as an application can use the written data to replay (resend) it to the ingestion point. Consider Figure 7.45, which shows such an archival solution.

FIGURE 7.45 An archived data stream solution

The solution begins with an event producer that sends data messages to an Azure Event Hubs endpoint. Azure Stream Analytics has subscribed to be notified of messages that are ingested into the endpoint’s message partition. The subscription was achieved by configuring Event Hubs as an input alias for the Azure Stream Analytics job. The data stream messages are retrieved from the Event Hubs partition and processed. Once the messages are processed, the data is stored in the data stores that are configured as outputs and referenced in the query’s INTO statement. That scenario is exactly what you have achieved over the course of the exercises in this chapter. To replay a data stream using the data that was stored in your ADLS container, perform Exercise 7.10.
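Before you work through the exercise, it can help to see the general shape of a replay application. The following Python sketch is a minimal illustration, not the code used in the exercise. It assumes the archive is line‐separated JSON with a hypothetical `eventTime` field, and it takes the sending step as a `send` function so that the example runs without any Azure resources. In a real replay, `send` would wrap a client that publishes to the Event Hubs endpoint.

```python
import io
import json
from typing import Callable, Iterable, TextIO


def read_archive(archive: TextIO) -> list[dict]:
    """Load archived events (one JSON document per line) in event time order.

    Assumption: 'eventTime' is an ISO 8601 UTC string in a uniform format,
    so sorting the strings also sorts the events chronologically.
    """
    events = [json.loads(line) for line in archive if line.strip()]
    return sorted(events, key=lambda event: event["eventTime"])


def replay(events: Iterable[dict], send: Callable[[str], None]) -> int:
    """Resend each archived event through 'send' and return how many were sent."""
    count = 0
    for event in events:
        send(json.dumps(event))
        count += 1
    return count


# Hypothetical archive content standing in for a file read from the ADLS container.
sample_archive = io.StringIO(
    '{"eventTime": "2024-01-01T12:00:02Z", "reading": 7}\n'
    '{"eventTime": "2024-01-01T12:00:01Z", "reading": 5}\n'
)

# Stand-in sender that collects messages in a list instead of publishing them.
sent: list[str] = []
replayed = replay(read_archive(sample_archive), sent.append)
```

Passing the sender in as a function keeps the replay logic independent of the ingestion point, so the same loop could be pointed at a test list, as here, or at a real event producer.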